Algorithmic Policing in Canada Explained

FAQ

This document is an explainer for a new report from the Citizen Lab and the International Human Rights Program at the University of Toronto’s Faculty of Law on the use and human rights implications of algorithmic policing practices in Canada.

- Are Canadian law enforcement agencies currently using, developing, or considering adopting algorithmic policing technologies?

- What algorithmic policing technologies are being used by, developed or under consideration by, or currently in the possession of which law enforcement agencies in Canada?

- What are the human rights and legal implications of using algorithmic policing technologies?

- How does Canada compare to other jurisdictions in terms of how predictive policing and algorithmic surveillance tools are being used?

- Are law enforcement agencies aware of the dangers of using these tools? If so, how do they justify their use?

- Which communities tend to be the most negatively impacted by these technologies?

- What do affected communities say about the use of these technologies?

- How were affected communities involved in this research?

- How might the findings of how these technologies are currently used provide insight into how they might be used in the future?

- What can policy- and lawmakers do to reverse or provide remedy to the use of these technologies?

On September 1, 2020, the Citizen Lab and the International Human Rights Program at the University of Toronto’s Faculty of Law released a report that investigated the use and human rights implications of algorithmic policing practices in Canada. This document provides a summary of the research findings and questions and answers from the research team.

We examined two broad categories of algorithmic policing technology: predictive policing technology (including both location-focused and person-focused algorithmic policing), and algorithmic surveillance technology.

Predictive Policing Technologies

Predictive policing technologies draw inferences through the use of mass data processing in the hopes of predicting potential criminal activity before it occurs. Such methods may be location-focused, attempting to forecast where and when criminal activity may occur, or person-focused, attempting to predict a given individual’s likelihood of engaging in criminal activity in the future.

We examined two kinds of predictive policing technologies in this report:

- Location-focused algorithmic policing technology purports to identify where and when potential criminal activity might occur, using algorithms programmed to find correlations in historical police data in order to attempt to make predictions about a given set of geographical areas. Examples: VPD GeoDASH, PredPol (USA).

- Person-focused algorithmic policing technology relies on data analysis in order to attempt to identify people who are more likely to be involved in potential criminal activity or to assess an identified person for their purported risk of engaging in criminal activity in the future. Examples: SPPAL (SK), Chicago SSL, Operation LASER (LA).

Algorithmic Surveillance Technologies

Algorithmic surveillance policing technologies do not inherently include any “predictive” element, but instead provide police services with sophisticated generalized surveillance and monitoring functions. These technologies automate the systematic collection and processing of data (such as data collected online or images taken from physical outdoor spaces). Some algorithmic surveillance technologies may process data that is already stored in law enforcement or police files, but in a new way (e.g., repurposing mug-shot databases as a data source for facial recognition technology).

The types of algorithmic surveillance technologies examined in this report include:

- Automated licence plate readers (ALPR) use pattern recognition software embedded in cameras to scan and identify the licence plate numbers of parked or moving vehicles. ALPRs enable surveillance by systematically collecting data in bulk, such as the date, time, and geolocation of all scanned vehicles, and the registration information (i.e., drivers’ identities) associated with those vehicles.

- Social media surveillance software mines and analyzes personal information and related data from social media platforms. That data is used to infer or predict behavioural patterns, future activities, future events, or relationships and connections among and between users and other people.

- Facial recognition is a biometric identification technology that uses algorithms to detect specific details about a face, such as the shapes of and distances between certain facial features, then uses a mathematical representation of those details to identify or match the same or similar faces in a facial recognition database. Facial recognition technology may be used on photos, videos, or on people in person, such as mall-goers or attendees at a protest.

- Social network analysis relies on statistics and data visualization purportedly to reveal social connections between people in particular networks, and how they influence each other, such as in the context of alleged gang membership or organized crime.

Are Canadian law enforcement agencies currently using, developing, or considering adopting algorithmic policing technologies?

The research conducted for this report found that multiple law enforcement agencies across Canada have started to use, procure, develop, or test a variety of algorithmic policing methods. These programs include both using and developing predictive policing technologies, and using algorithmic surveillance tools. Additionally, some law enforcement agencies have acquired tools with algorithmic policing capabilities but, to date, have not decided to use those capabilities.

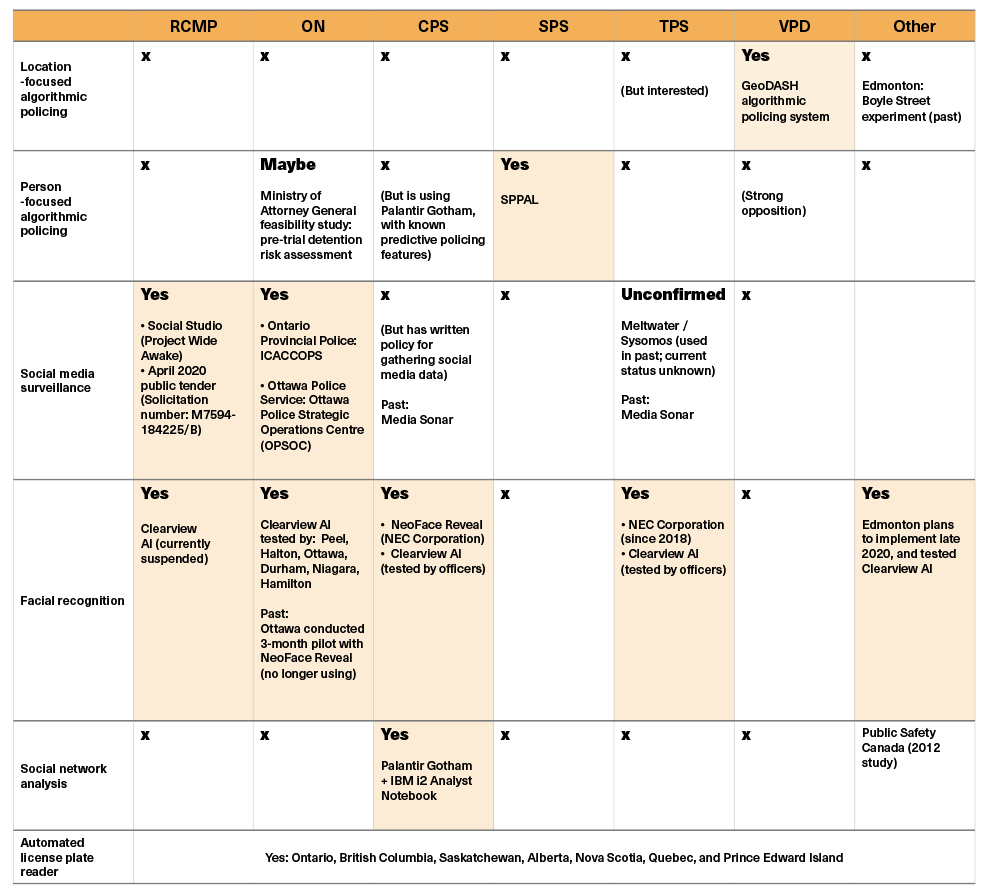

What algorithmic policing technologies are being used by, developed or under consideration by, or currently in the possession of which law enforcement agencies in Canada?

Location-Focused Algorithmic Policing Technologies

- The Vancouver Police Department (VPD) uses a program known as the GeoDASH algorithmic policing system (APS). The GeoDASH APS predicts the top six areas in Vancouver (three 100 square metre blocks and three 500 square metre blocks) where a break-and-enter is likely to happen within a given two-hour period throughout the day. It was created collaboratively by in-house staff at the VPD, a consortium of academics, and the company Latitude Geographics.

- The Toronto Police Service (TPS) has collaborated with Environics Analytics, a data analytics company, since at least 2016 to engage in data-driven policing. The TPS also has access to IBM’s Cognos Analytics and Statistical Package for the Social Sciences software, which enables data mining and location-based predictive modelling. However, the TPS has no plans to implement predictive policing in the immediate future, and has not engaged with any technology vendors for that purpose. The TPS may consider implementing location-focused algorithmic policing in the future, if it has the resources and if there is alignment with federal and/or provincial governance strategies, among other factors.

Person-Focused Algorithmic Policing Technologies

- The Calgary Police Service (CPS) uses Palantir Gotham, a product from Palantir Technologies, to integrate various data sources for analysis, but does not use the software’s algorithmic and predictive policing capabilities (such as integrating social media content, email and telecommunications information, financial records, and credit history). However, the CPS stores in Palantir information about individuals’ physical characteristics, relationships, interactions with police, religious affiliation, and “possible involved activities,” in addition to using Palantir to map out the location of purported crime and calls for service. Palantir Technologies has been closely associated with controversial predictive policing programs in the United States, and media reports indicate the company is preparing to ramp up operations in Canada.

- The Saskatoon Police Service (SPS) partnered with the University of Saskatchewan and the Government of Saskatchewan to form the Saskatchewan Police Predictive Analytics Lab (SPPAL). The SPPAL’s current project is unique in that it claims to focus on preemptively identifying potential victims, such as missing youth. The SPPAL intends to expand the scope of its algorithmic work in the future to address other safety concerns and community issues such as repeat and violent offenders, intimate partner violence, the opioid crisis, and individuals with mental illness in the criminal justice system. The SPPAL approach may be considered an algorithmic extension of the Hub model of community safety, which aims to identify “at risk” individuals and connect them with needed social support, to intervene before the criminal justice system is involved.

- The Ontario Ministry of the Attorney General has begun to explore the use of a pre-trial risk assessment tool for use in pre-trial detention (i.e., bail) decisions, and has previously completed a feasibility study of such tools.

- In the corrections context, researchers at the University of Saskatchewan are conducting a machine learning project based on, and envisioned to replace, the Level of Service Inventory – Ontario Revision (LSI-OR). The LSI-OR is a statistical risk assessment instrument that is used to carry out risk and needs assessments every six months for “all adult inmates undergoing any institutional classification or release decision, for all young offenders both in secure and open custody and for all probationers and parolees.”

Algorithmic Surveillance Technologies

- Automated licence plate readers (ALPR): ALPRs are mounted on police vehicles and/or highways and used by law enforcement in provinces across Canada, including Ontario, British Columbia, Saskatchewan, Alberta, Nova Scotia, Quebec, and Prince Edward Island.

- Social media surveillance software: Social media surveillance technology has been used by the Toronto Police Service, the Calgary Police Service, the Ottawa Police Service, and the RCMP. The RCMP also issued a public tender in April 2020 for more advanced social media surveillance capabilities. Such tools, including the London, ON-based Media Sonar and the tools used for the RCMP’s “Project Wide Awake,” have been tied to law enforcement surveillance of civil liberties movements such as protests for Indigenous rights or racial justice, raising concerns about the use of more powerful, algorithm-driven versions of such tools. The Ontario Provincial Police may also be surveilling private chat rooms through a tool known as the “ICAC Child Online Protection System” (ICACCOPS).

- Facial recognition: The Calgary Police Service and Toronto Police Service use facial recognition technology from NEC Corporation, comparing photos and drawings of unidentified individuals to photos in pre-existing mug-shot databases. The Ottawa Police Service conducted a three-month pilot with the facial recognition product NeoFace Reveal, but discontinued its use. The Edmonton Police Service, York Regional Police, and Peel Regional Police Service have all announced plans to adopt facial recognition systems. In February 2020, multiple police agencies across Canada were found to have used or tested the controversial facial recognition tool Clearview AI, which built its technology by scraping 3 billion images, specifically people’s photos, from the Internet without their consent.

- Social network analysis: The Calgary Police Service engages in algorithmic social network analysis using a combination of Palantir Gotham and IBM’s i2 Analyst’s Notebook.

Note: An ‘x’ does not necessarily indicate confirmation that the law enforcement authority is not using a particular technology, but may instead mean only that the authors did not find evidence that definitively indicates that the service is using that technology.

What are the human rights and legal implications of using algorithmic policing technologies?

The legal and policy analysis conducted for this report found that the use of algorithmic policing technologies by law enforcement can raise many potential constitutional and civil liberties violations under the Canadian Charter of Rights and Freedoms and international human rights law. In particular, the analysis identified a number of issues related to the use of invasive forms of surveillance of personal data and mass data, algorithmic and systemic bias, discriminatory impacts, lack of transparency, and due process concerns. In some ways, the use of algorithmic policing technology is fundamentally incompatible with constitutional and human rights protections, particularly concerning rights relating to liberty, equality, and privacy. Specifically, the use of algorithmic policing technologies acutely endangers the following human rights:

- the right to privacy, through issues such as indiscriminate surveillance, eroding reasonable expectation of privacy in public and online spaces, the accuracy of data inferences, and data-sharing between law enforcement and other governmental agencies;

- the rights to freedom of expression, peaceful assembly, and association, through issues such as undermining anonymity of the crowd and targeting social movements and marginalized communities;

- the right to equality, through issues such as algorithmic bias perpetuating discriminatory feedback loops, heightened data visibility of socio-economically disadvantaged individuals, and math-washing systemic discrimination curtailing possibilities of structural reform to address root issues;

- the right to liberty and to be free from arbitrary detention, through issues such as generalized statistical inferences supplanting individualized suspicion, and risks of automation bias; and

- the right to due process and to a remedy, through issues such as lack of transparency, accountability, and oversight mechanisms, such as notice and disclosure requirements.

How does Canada compare to other jurisdictions in terms of how predictive policing and algorithmic surveillance tools are being used?

While the use of algorithmic policing technologies appears to be expanding at present, the use of this type of technology does not appear to be widespread yet in Canada, even if the factual record on this point is not definitive. The relatively low level of adoption of predictive policing technologies by Canadian police services, compared to jurisdictions such as the United States and United Kingdom, may be the result of a number of potential factors, including policymakers’ and law enforcement agencies’ cautious approaches to adopting or integrating controversial algorithmic technologies; observations of and a desire to avoid the controversies associated with law enforcement use of such technologies in the United States; or budgetary or resource constraints that have limited the procurement or use of these technologies even where there is interest in adopting them.

Adoption by Canadian law enforcement agencies is more widespread for algorithmic surveillance technologies than for predictive policing technologies. For example, numerous police services across several provinces have established, piloted, tested, or intend to implement facial recognition systems. Automated licence plate recognition has been in place throughout multiple provinces for years, and social media surveillance tools have been prominently deployed by the Calgary Police Service, Toronto Police Service, and RCMP. The RCMP is also currently seeking further advanced social media surveillance capabilities, including technology that involves a proprietary or custom algorithm and will enable expansive access to many categories of online spaces, including social media networks, gaming platforms, darknet websites, photo and video sharing sites, and location-based services.

We must reiterate, however, that our research findings were limited by the lack of publicly available information about the use of algorithmic policing technologies by police services and law enforcement agencies across Canada, and by difficulties in freedom-of-information request processes. This means it is possible that there are algorithmic surveillance technologies or predictive policing technologies being used, developed, or considered by law enforcement agencies in Canada that are not covered in this report and are unknown to the public.

Are law enforcement agencies aware of the dangers of using these tools? If so, how do they justify their use?

The law enforcement representatives we interviewed for this project demonstrated that they are aware of human rights concerns with algorithmic policing technologies and of the potential dangers to historically marginalized groups. They have not necessarily sought to justify the use of such tools in the face of these concerns and dangers, but rather, have either decided not to use particular tools at all, or have implemented measures that they consider sufficient to mitigate or prevent the potential negative impacts. For example, the VPD is strongly opposed to the use of person-focused predictive policing technology, and views programs in the United States as cautionary tales to be avoided. At the same time, the VPD has designed its GeoDASH algorithmic policing system with certain features intended to safeguard against discriminatory outcomes, such as exclusionary zones and meetings with their community policing unit.

The SPPAL is also aware of some of the dangers of algorithmic policing, particularly involving algorithmic transparency and reliability. To address this, the SPPAL chose to develop its algorithmic policing technology entirely in-house for the time being, to avoid issues associated with proprietary software, to ensure full control over the technology, and to retain the ability to open it up to independent review and be more responsive to criticism and new data.

Which communities tend to be the most negatively impacted by these technologies?

While algorithmic policing technologies may seem futuristic, they are inseparable from the past. Such technologies’ algorithms are generally trained on historical police data, and such data has embedded within it historical and ongoing patterns of systemic discrimination and colonialism in Canada’s criminal justice system. This includes, in particular, anti-Black racism, anti-Indigenous racism, and discrimination against racialized individuals and communities more broadly. The criminal justice system also involves systemic discrimination against other historically oppressed and ongoingly marginalized communities, such as the LGBTQ+ community, those who live with mental illness, and those who are socioeconomically disadvantaged through poverty or homelessness. This, too, is embedded in historical crime data, and thus embedded in algorithmic policing technologies that are built on top of such data. As a result, these communities are the most likely to be negatively impacted by the use of algorithmic policing technologies, and are at the greatest risk of having their constitutional and human rights violated or further violated.

Even if algorithmic policing technologies themselves are not biased, their use and application can exacerbate and amplify biased conduct among police officers and law enforcement. Such technologies equip law enforcement authorities with unprecedented capabilities of surveillance and profiling, which in turn can encourage or enable more frequent interference with the liberty, equality, privacy, free expression, and due process rights of members of communities that are already targeted or discriminated against by actors in the Canadian criminal justice system.

What do affected communities say about the use of these technologies?

Community representatives interviewed raised several concerns that closely overlap with the human rights issues associated with algorithmic policing as examined in this report, including algorithmic bias, the problems with police data, feedback loops entrenching systemic discrimination, data privacy, the chilling effect on racial justice and Indigenous rights activism, and exacerbating community distrust of law enforcement.

Above all, community representatives expressed the overriding concern that algorithmic policing tools would perpetuate systemic discrimination against marginalized communities while simultaneously masking the underlying systemic issues and root problems in the criminal justice system that cannot be fixed through technology.

Community representatives also viewed the use of algorithmic policing tools as inseparable from Canada’s history of colonialism and systemic discrimination against Indigenous, Black, and other racialized and marginalized groups. Interview responses often turned towards the role of this history and its continuing impacts today, including the historical function of law enforcement and associated implications for algorithmic policing.

Community representatives involved in racial justice advocacy further shared the view that algorithmic policing tools are merely continuations of pre-existing policing tools that have been used to justify state violence.

Those interviewed expressed doubt that algorithmic policing could benefit marginalized communities, with some citing missing and murdered Indigenous women and girls as an example. “I’ve never seen a dot map in my life for intimate partner violence. Was there a dot map for missing Indigenous women?”

Lastly, community representatives expressed concern regarding their lack of familiarity with emerging algorithmic policing techniques and, in doing so, raised issues of information asymmetry between policed communities on the one hand and law enforcement and police technology vendors on the other.

How were affected communities involved in this research?

We identified the most affected communities as those who have been historically and ongoingly impacted by systemic discrimination in the Canadian criminal justice system, with a focus on racial discrimination, particularly Black communities and Indigenous peoples. The involvement of such communities in this research occurred primarily through formal research interviews governed by a University of Toronto research ethics protocol. We interviewed legal practitioners, human rights advocates, community service providers, and racial justice activists and experts who are part of such communities and who are familiar with how the criminal justice system impacts and interacts with the circumstances and experiences of Black, Indigenous, and other racialized and marginalized individuals and groups in Canada.

How might the findings of how these technologies are currently used provide insight into how they might be used in the future?

Our overall finding is that Canadian law enforcement agencies have proceeded cautiously when it comes to predictive policing programs, but have proceeded with insufficient regard for human rights and constitutional rights in adopting algorithmic surveillance technologies, especially facial recognition.

With respect to predictive policing technologies, it is not too late for Canada to get it right and implement the necessary legal and policy frameworks, oversight mechanisms, and best practices to ensure that such programs, if any are implemented at all, are less likely to pose a threat to human or constitutional rights, particularly those of historically and ongoingly marginalized groups. Governments, lawmakers, and law enforcement authorities at all levels (municipal, regional, provincial/territorial, and federal) have a role to play and must act now to establish human rights-centred governance over algorithmic policing technologies.

With respect to algorithmic surveillance technologies, some technologies that have already been in use must be immediately withdrawn and subjected to a moratorium, such as facial recognition technologies and algorithmic social media surveillance. Going forward, law enforcement authorities must dramatically increase transparency and early public disclosure regarding their use and consideration of such technologies before they are developed or deployed. Nothing less will ensure that such technologies are subject to necessary democratic debate and fully informed decisions around their use, including limits or bans ensuring that any such use is necessary and proportionate to legitimate legal objectives in the context of upholding human and constitutional rights.

What can policy- and lawmakers do to reverse or provide remedy to the use of these technologies?

In our report, we provide twenty recommendations to the federal and provincial governments, lawmakers, and law enforcement authorities to mitigate the risk to human and constitutional rights from the use of algorithmic policing technologies. Because Canada currently appears to have a low level of adoption of these technologies, most of our recommendations aim to prevent harm, rather than provide remedy for harm after the fact. Harm after the fact, in the context of a constitutional or human rights violation, may also be more appropriately addressed through pre-existing legal mechanisms, such as Charter claims. However, our legal analysis found that the lack of clear remedies for harm from algorithmic policing, after the fact, is precisely one of the problems. This means that it is even more important to get oversight mechanisms and preventative law and policies right, before algorithmic policing technology is used on members of the public.

Governments, lawmakers, and law enforcement agencies must also recognize and accept that in some cases, the problem may lie inherently within the technology itself. Under these circumstances, even the most careful use, involving the strictest adherence to the highest standard of best practices, can still result in significant harm, including human rights violations and disproportionately negative outcomes for members of historically marginalized communities who already face systemic discrimination from the criminal justice system.

The first several recommendations of the twenty are the most urgent and important, because they are threshold inquiries and policies that go to deciding if a certain type of algorithmic policing technology should even be used at all. Our top three immediate calls to action are:

- Law enforcement agencies must be fully transparent with the public and with privacy commissioners, immediately disclosing whether and what algorithmic policing technologies are currently being used, developed, or procured, to enable democratic dialogue and meaningful accountability and oversight.

- Governments must place moratoriums on law enforcement agencies’ use of technology that relies on algorithmic processing of historic mass police data sets, pending completion of a comprehensive review through a judicial inquiry, and on use of algorithmic policing technology that does not meet prerequisite conditions of reliability, necessity, and proportionality.

- The federal government should convene a judicial inquiry to conduct a comprehensive review regarding law enforcement agencies’ potential repurposing of historic police data sets for use in algorithmic policing technologies.